Responsible AI

A Better Understanding of Automation Risk

It is important to understand that AI risks are not limited to the AI models themselves or the data on which they are built. To manage these risks successfully at scale, project teams must evaluate the entire AI ecosystem and the complete lifecycle of everything within it. This requires a well-designed operating model and standards grounded in a clear governance strategy, along with quality control processes that ensure relevance, reliability and information security.

How do you address these risks meaningfully? Through a responsible AI program that aims to develop, build and deploy predictive systems in a trustworthy, safe and ethical manner, so that companies can accelerate the value they gain from AI with confidence.

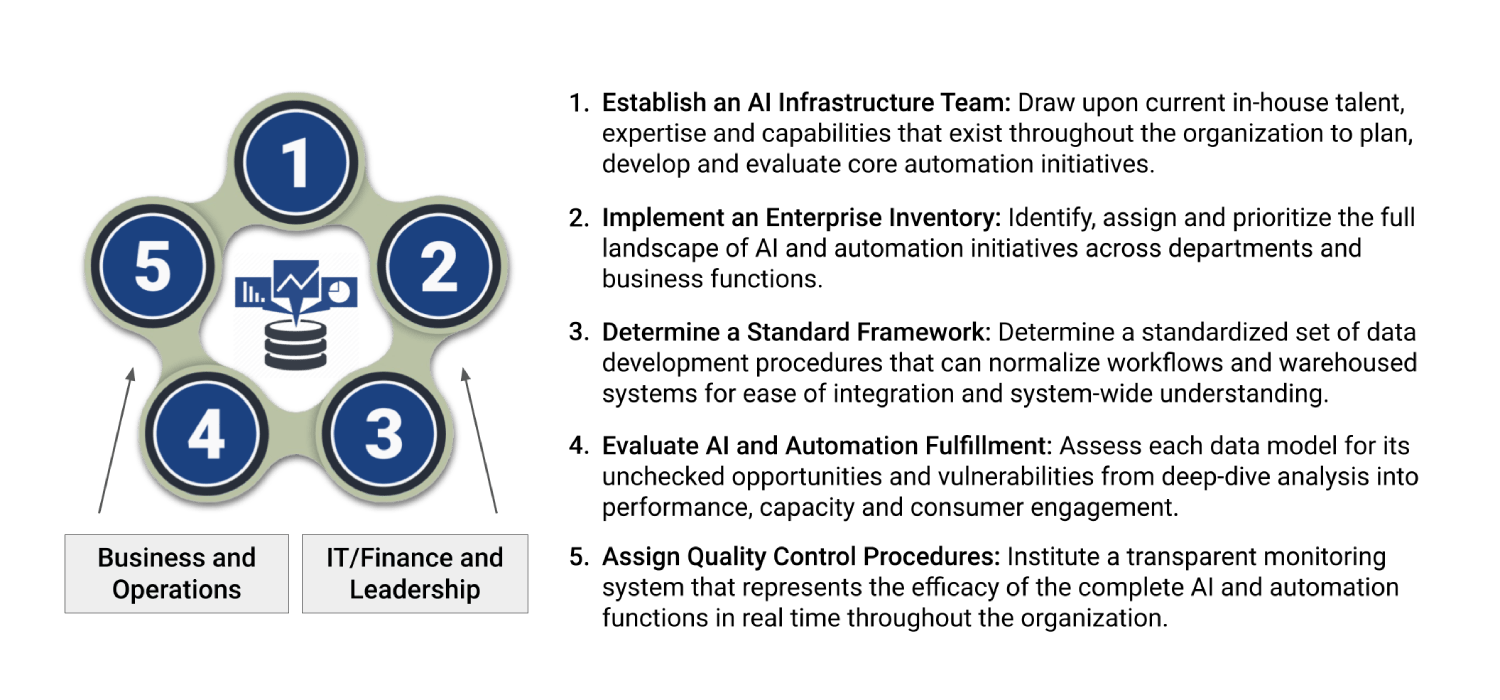

Five Tactical Steps for AI Accountability

To drive strategic decisions across a business unit, organizations can evaluate both the benefits and the risks of intelligent automation, including where each touches critical processes, to uncover hidden opportunities and vulnerabilities.

Data in business applications is growing exponentially. It is used not only to store objects and feed user-centric algorithms, which are effectively set to "remember things" or unlock patterns that streamline operations, but also to analyze content, create context and make better decisions.

66% of companies without an AI risk function today plan to have one in the next three years.

Responsible AI helps your organization put this data to work in an "analytically operational" manner to power machine learning applications.

Three Practical Model Sets

- Operational Data: The strategic warehouses that capture most of the information about a business's internal functions and processes, for customers, stakeholders and more.
- Analytical Data: The routine collection, transformation and organization of data to draw conclusions, make predictions and drive informed decision making.
- Analytically Operational Data: The convergence of operational and analytical data to support the best possible decision each time, with explainable outcomes and complete visibility.

Factors That Drive Reliability in Data Modeling

Detecting and preventing errors can be challenging, even with full access to both the model and the data it uses. Identifying risk and reviewing predictive models were once reserved for IT back-office teams or hired consultants, but that approach has run its course.

Data Integrity

Does each dataset draw on the right (or best) source of information, and is it complete?

Statistical Clarity

Does the model reflect what it was designed to measure, and are its variables clear?

Model Accuracy

How often does the model produce correct results, and can they be verified?

Transparency

Do all stakeholders understand and agree with how these predictions are made?

Impartiality

Does the model inadvertently discriminate based on gender, race or other existing biases?

Resiliency

Can the model be corrupted by unintentional data collection or by changing conditions?
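Several of these questions can be partly quantified. The sketch below is illustrative only and assumes plain Python lists and dicts as input; the function names are hypothetical, not part of any specific tool. It maps four factors to one simple, well-known metric each: a completeness ratio for data integrity, accuracy against verified labels for model accuracy, the demographic parity gap (one of many fairness metrics) for impartiality, and the population stability index (PSI) as a basic drift check for resiliency. Statistical clarity and transparency depend on human review and are not covered here.

```python
import math
from collections import Counter


def completeness(rows, required_fields):
    """Data integrity: share of rows where every required field is present and not None."""
    if not rows:
        return 0.0
    complete = sum(1 for r in rows if all(r.get(f) is not None for f in required_fields))
    return complete / len(rows)


def accuracy(y_true, y_pred):
    """Model accuracy: fraction of predictions that match verified labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty sequences of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_gap(y_pred, groups, positive=1):
    """Impartiality: largest difference in positive-prediction rate between groups."""
    if len(y_pred) != len(groups):
        raise ValueError("y_pred and groups must have the same length")
    totals = Counter(groups)
    positives = Counter(g for g, p in zip(groups, y_pred) if p == positive)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def population_stability_index(expected, actual, bins=10):
    """Resiliency: PSI between a baseline sample and a new sample of one numeric feature."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when every value is identical

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    rows = [{"id": 1, "income": 52000}, {"id": 2, "income": None}]
    print(completeness(rows, ["id", "income"]))                     # 0.5
    print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))                     # 0.75
    print(demographic_parity_gap([1, 0, 1, 1], ["a", "a", "b", "b"]))  # 0.5
    print(population_stability_index([1, 2, 3, 4], [1, 2, 3, 4]))   # 0.0
```

A single number per factor is only a starting point: which thresholds count as acceptable, and which fairness metric fits a given use case, remain governance decisions rather than something the code can settle.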
